Sound Event Detection and Recognition in Autonomous Robot Navigation


Abstract

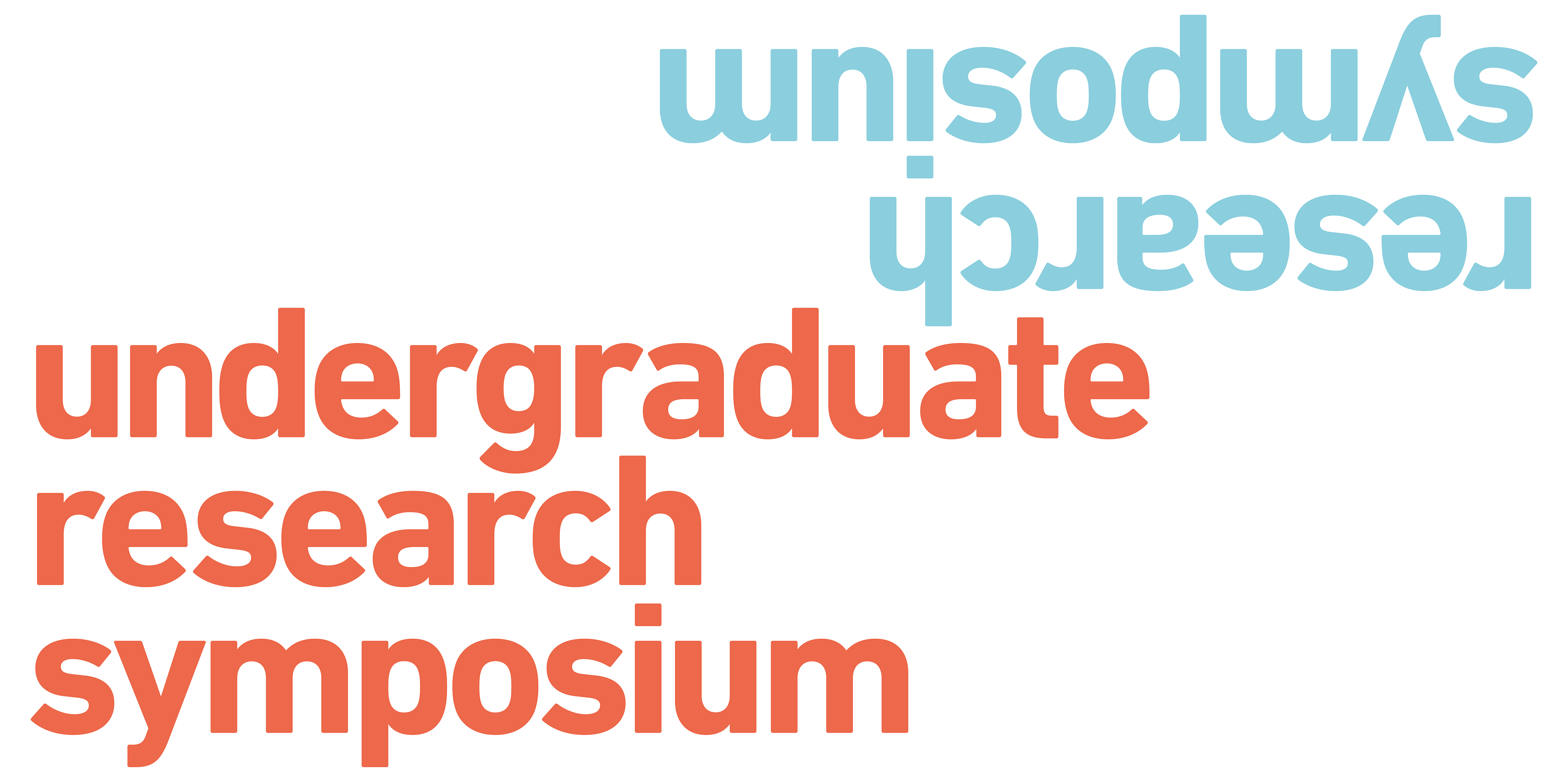

Many elders in our communities live alone or lack the constant support of someone watching over them. An artificially intelligent navigator robot could patrol the living environment to provide consistent activity detection and recognition: alerting residents to threats or dangers, calling for emergency support if needed, and being customized to provide assistance or reminders for the user’s daily routines and activities. This presentation focuses on the process of applying various engineering tools, with a multidisciplinary approach, to sound activity detection and recognition as the aural layer of a multi-modal AI-based sensing system developed for elderly care. It first discusses how sound behaves physically and how its signals are represented through the tools of technology. It then presents how AI uses these precisely quantifiable behaviors of sound to detect and recognize various sound events, building the foundation for the more hands-on side of the research. The Oculus Prime Navigator Robot, which is equipped with various sensors such as RGB and depth cameras as well as a directional microphone array, allows active real-time detection of human activities in the living environment, which can also be demonstrated to the audience.

Faculty Supervisor: Dr. Shahram Payandeh, School of Engineering Science, Simon Fraser University

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.