Comparing touchscreen-based tests of pattern separation for rodent models

Abstract

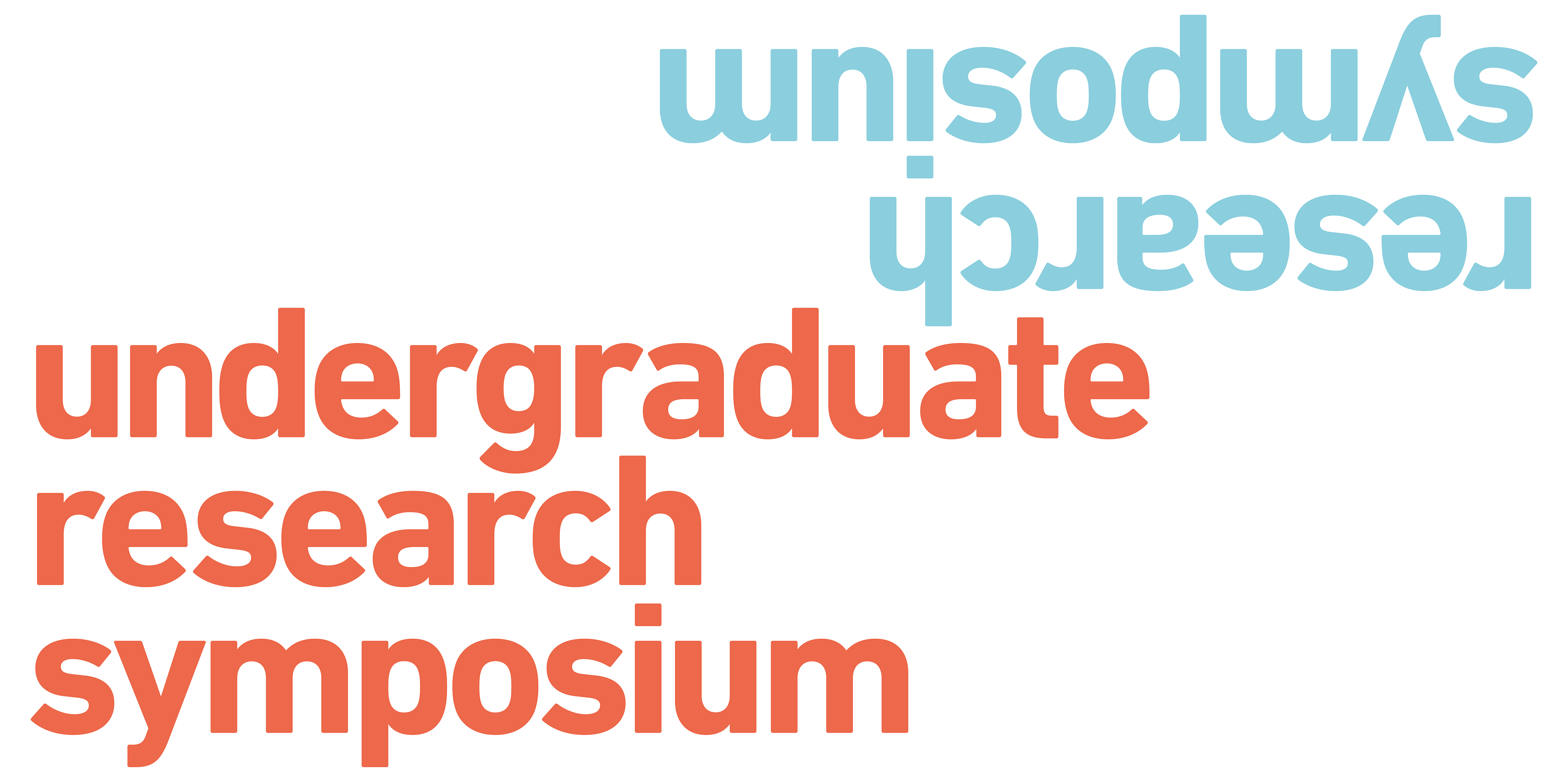

One of the challenges in developing treatments for Alzheimer’s disease (AD) is the “translational gap”: findings and treatments from rodent models have not successfully translated into effective treatments for humans. Improving how well the cognitive testing methods used with rodent models translate to humans is one way to narrow this gap. Researchers have developed touchscreen-based cognitive testing methods for rodents to improve translation between preclinical studies and clinical trials. These methods take a translational approach by mirroring the stimuli (images on a screen) and the response (touch) used in human testing methods, such as the Cambridge Neuropsychological Test Automated Battery (CANTAB). In touchscreen training, animals respond to visual stimuli on a touch-sensitive screen for rewards; the approach relies on rodents' natural exploratory tendencies and on daily training to reach task completion.

The hippocampus is among the brain regions most vulnerable to Alzheimer’s disease. One of its functions is pattern separation, the process of encoding similar inputs in memory in a way that distinguishes them from one another. Two touchscreen-based cognitive tests are designed to evaluate pattern separation: (1) the Location Discrimination (LD) task and (2) the Trial-Unique Non-Matching to Location (TUNL) task. Both tests can be administered in Bussey-Saksida Chambers. In our study, we compare these two tests in terms of data quality, training time, and difficulty.

Faculty Supervisor: Dr. Brianne Kent, Department of Psychology, Simon Fraser University

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.